PSO

Due to the number of parameters and their interdependency, optimization and tuning parameters of semiempirical quantumchemical methods by hand is not possible. For this, algorithms for such multi-dimensional problems need to be employed. In contrast to, e.g., geometry optimizations, the gradients of the parameter space are not known, therefore only numerical optimizations2 can be used. Such methods do not need any prior information about the parameter space and can be used for basically any optimization task. One example would be the simplex/simulated annealing (SIMPSA) algorithm, which was used for the azobenzene-specific parameter optimization.

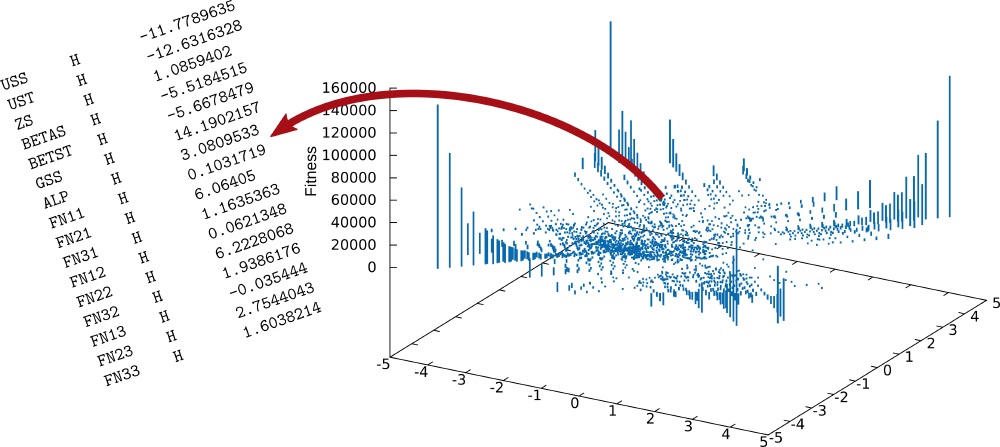

In my thesis I used a different type of method, namely particle swarm optimization (PSO). In a PSO, as the name suggest, a swarm of individual particles is propagated on an n-dimensional surface to find its global minimum. The height of the surface is given by a function, which evaluates the “fitness” of a particle at its coordinates on the surface. Since no information about the surface is available at the start of the propagation, no gradient can be followed towards a minimum. Therefore, the driving-force of the particle is communication and memorization. A particle is able to remember its own position at lowest height – best fitness – and can exchange this information with particles in the vicinity – “neighborhood”. With this information – and some random perturbation – the particle will move along a vector that is a sum of the vectors towards its own and the best known fitness of the neighborhood.

Because PSO is a non-deterministic global optimization method the chance of finding the “best solution” in a given problem space is never 100% within a finite number of iterations. Rigorously increasing the iteration number can help but is usually not an option i) because of the computational cost per fitness evaluation (see below) and ii) because there is no guarantee that every iteration reaches points in the problem space that were never accessed before. Thus, covering the complete problem space is not feasible with non-deterministic methods. The only resort is to start many optimization runs with varying initial conditions and to see if they show a tendency to converge to the same solution. In contrast, deterministic optimization algorithms will always find the best possible solution, but are only feasible for a small number of dimensions due to an exponential scaling in computational effort.

The PSO as presented in my thesis was written in Perl, mainly because of the reason it is my language of choice when it comes to automation on the computer. However, any other programming/scripting language may be suited for the PSO. This is because the computational demand of the algorithm itself is relatively low. The core of the PSO algorithm just performs some vector additions, so there is no need to employ an object-oriented or close-to- hardware-level language.